Jump into the dynamic future of design with us

We’ve been keeping an open mind as Artificial Intelligence sweeps the world, from ChatGPT and DALL·E to mind-blowing deepfakes, and now Sora: text to video in 10 seconds. But how does AI integrate into studio life on a practical level? What does it look like when a machine makes art? From conceptual illustration to the day-to-day tasks of the studio, can AI benefit and improve the design process?

We’ve always embraced automation in streamlining the repetitive and mundane, from Photoshop actions to cascading style sheets in InDesign. But this feels different: it’s a new dawn of ‘baked-in’ Artificial Intelligence, systems you can communicate with directly, pushing further into ‘traditional design skills’ territory.

As automation streamlined the mundane, AI takes it further, freeing us to focus on the tasks we love the most: conceptualisation and ideation. It’s early days, but we’ve found Adobe Photoshop’s AI brain for filling backgrounds, extending scenes and generating additional content promising – taking ‘Content Aware’ to the next level. Adobe’s Illustrator AI tool for generating vector graphics also shows huge potential to extend everyone’s skillset into what is traditionally the reserve of the illustrator. We’re also using ChatGPT to start thoughts, confirm rationales and inform communications and descriptions – where it feels appropriate.

I sat down with our Creative Director, Dan Clark, who recently explored the potential of DALL·E by OpenAI to create a series of illustrations. We’ve documented our experience below and are sharing our thoughts. We’d love to hear your thoughts too: are you sitting on the sidelines, toeing the line or diving deep with AI? Please find us on LinkedIn, and let’s continue the conversation.

In this symbiotic relationship between human ingenuity and Artificial Intelligence, limitless creative possibilities are unfolding, shaping a dynamic future for design, heralding a transformative era.

The Process

We’ve been on a journey… this is where DALL·E took us:

We had a few false starts…

Creatik: “Generate an image of lots of kangaroos in the style of the Beatles album sergeant peppers money hearts club band.”

DALL·E: Error creating image.

“I'm unable to generate an image based on your request due to content policy restrictions. If you have any other ideas or themes you'd like to explore, feel free to share them, and I'll be happy to help create something new for you!”

Creatik: “Generate an image of lots of kangaroos in the style of the artist Peter Blake.”

DALL·E: Error creating image.

“I'm unable to generate an image based on your request due to content policy restrictions. If you have another idea or theme you'd like to explore, please let me know, and I'll do my best to assist you within the guidelines.”

At this point, we refresh ourselves on the content policy and try again.

Creatik: “Generate an image of lots of kangaroos in a style of the sex pistols artwork.” Note: this triggered a text-classification warning, presumably because ‘sex’ and ‘pistols’ were flagged. We had to re-agree to the terms to continue.

Creatik: “Generate an image of lots of kangaroos, the kangaroos are all wearing hats and carrying umbrellas, the style of the image is lino cut illustration.”

Creatik: “Extend the image to the left to show a wallaby sitting in a deck chair.”

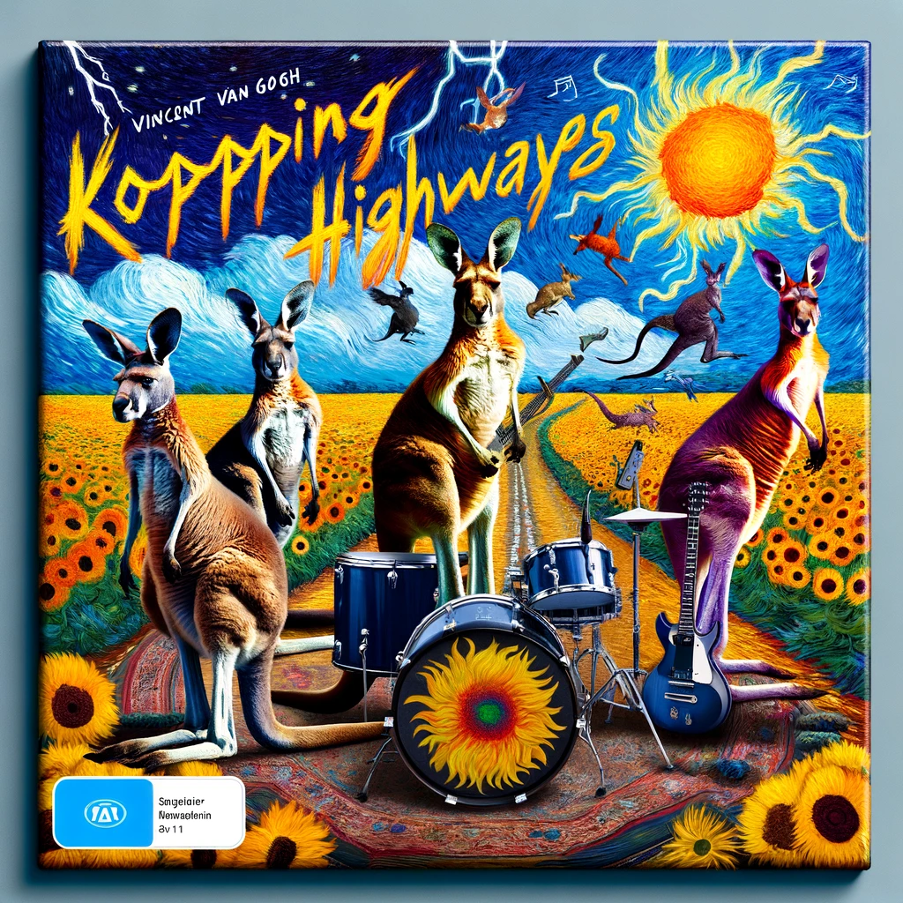

Creatik: “This looks great, please now change the image to the style of Van Gogh sunflowers.”

Creatik: “Using this image create an album cover for an imaginary kangaroo rock band.”

Now we want to push the limits and see the computer’s capability to iterate and “roll out” the idea in context.

Creatik: “Create some merchandise to promote the band using this image style.”

Let’s look at the learnings…

1. Unpredictability

The first noticeable takeaway is unpredictability. I find us gathering around the screen like a poker table, waiting for the outcome of each text prompt we enter: what will we see, and will it be a pleasant surprise? It’s a very different process from our usual approach of sketching a thumbnail and then working up ideas on the Mac.

We achieved the results above in about 30 minutes. (Illustrating, or sourcing and editing, any of these images would have taken us a considerable amount of studio time.) While the results come quickly, it’s at the expense of a definitive outcome and the confidence of knowing what you are going to get.

The gamble isn’t exactly bad, it’s just an unknown and therefore a small risk factor. There are limitations in terms of the coherence and realism of the generated content. Sometimes, the generated images don’t fully align with the intended description, or they may contain artifacts that make them look unrealistic or nonsensical. It’s unpredictable and surprising. Sometimes a pleasant surprise, and sometimes a kangaroo with a tail for a head.

2. Your relationship with the machine generating the art

Design is instinctive, nuanced and relational, created for people, by people, so there is much to be said about attempting to ‘converse’ with an inanimate object. Design outcomes are intrinsically woven into time, place, culture, context and emotions, and communicating this lived experience in a few brief sentences is a tall order. As humans we can make assumptions, join the dots and fill the missing space in a brief. Communication will always live in the duality of context and comprehension. Four key nuances of humans talking with machines:

a. Results are driven by your relationship with the machine, and this will vary from person to person. We keyboard-mashed a few directives before we understood the machine’s comprehension and its restrictions. Perseverance is important, as is the ability to say the same thing in a variety of ways.

b. The result is only as good as the machine’s reference for what you are describing. The internet is full of things to draw from, but machines have yet to learn how to connect different people, places and things out of context to create new meaning. Creating AI artwork is still quite linear and literal – you get what you ask for, with a large margin of error.

c. In a separate experiment, we found issues with terminology, specifically terminology in context. Motif, logo, brand mark, word mark, icon, device: all different words we use to describe similar things. AI struggled to distinguish between these in the context of graphic design.

d. AI models are trained on datasets, and the biases present in these datasets can manifest in the generated content. This can lead to issues like stereotyping, lack of diversity, or unintended associations in the generated images.

3. Design is an iterative process.

Whilst AI is unpredictable, I can make a case for the imagination and abstract thinking of the machine, purely in relation to composition. The images we created are fun and playful, and feel like something new has been created. They follow some of the principles of design – balance, proportion, scale, hierarchy etc. Yet currently the system is not easily capable of iterative design: having provided a usable composition (with some tweaks), a simple request to change a colour or remove a detail results in an entirely new image. The ability to reproduce the same image with small changes, based on qualitative feedback, is lacking. Noting this, and after some further research, we discovered the need to request the ‘seed’ that the composition is based upon.

For example:

Prompt: Create an image of a cockatoo sitting on a garden fence

ChatGPT provides an image.

Question: What is the seed for this image?

ChatGPT: The seed for this image of the cockatoo sitting on a garden fence is 4060328353.

Prompt: Modify the image with seed 4060328353: Change the fence to metal railings

ChatGPT provides a second image, largely similar to the original in colour and composition, but still a very different image.
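One way to picture why quoting the seed did not lock the image down: in a typical generative pipeline the seed fixes only the starting randomness, while the prompt still steers the result. The sketch below is a toy analogy under that assumption. `toy_generate` is a hypothetical stand-in that returns a short list of numbers rather than an image, and it does not model how DALL·E or ChatGPT actually handle seeds.

```python
import hashlib
import random


def toy_generate(prompt: str, seed: int, size: int = 16) -> list[int]:
    """Hypothetical stand-in for an image model: returns `size` fake 'pixel' values."""
    # The seed fixes only the starting randomness (the "noise").
    rng = random.Random(seed)
    noise = [rng.randrange(256) for _ in range(size)]
    # The prompt still steers every value, so editing it shifts the whole result.
    steer = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [(n + s) % 256 for n, s in zip(noise, steer)]


seed = 4060328353  # the seed quoted in the example above
first = toy_generate("a cockatoo sitting on a garden fence", seed)
repeat = toy_generate("a cockatoo sitting on a garden fence", seed)
edited = toy_generate("a cockatoo sitting on metal railings", seed)

print(first == repeat)  # same prompt and seed: identical output
print(first == edited)  # same seed, edited prompt: a different output
```

In this toy version the seed buys repeatability for an unchanged prompt, but any edit to the wording still changes the output, which mirrors what we saw with the metal railings.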

It’s often the feedback process, which is context-sensitive and requires emotional depth and outside-the-box thinking, that AI is yet to grasp.

On the plus side, because the process isn’t iterative, I think originality, or rather singularity, can be assumed. I’d feel confident that what I created was a one-off and couldn’t be replicated, but it raises the question: is it far enough away from its original reference point?

4. Borrowing vs taking, Copyright and IP.

Intellectual property rights surrounding AI-generated artwork often reside in a legal grey area, raising complex questions about ownership and authorship. While AI algorithms autonomously produce the artwork, humans orchestrate and guide the process. Consequently, legal ownership may hinge on factors such as the extent of human involvement, the ownership of the AI system, and contractual agreements. Resolving these issues requires clear legal frameworks that balance the contributions of both AI and humans, ensuring fair recognition and protection of intellectual property rights in the evolving landscape of AI-generated creations.

In our specific experiment we noted too many similarities between Van Gogh’s original Sunflowers and our AI-generated image, which prompted an internal discussion on borrowing ideas vs taking them or straight up knocking them off. We also faced a copyright warning when we referenced ‘The Beatles’ but not when we referenced ‘The Sex Pistols’, although the latter triggered a text-classification warning.

It's important to note that there are ethical and moral judgements and obligations that must lie with humans when guiding and signing off the content that is generated. While the rules of engagement require you to agree to the policies of the generating platform you are using, we know lawmakers are in the slipstream behind AI while the world explores its possibilities. Design decisions must involve ethical considerations that require human judgment and moral reasoning, which AI currently lacks.

Design decisions must involve human judgment and moral reasoning.

5. File size & file type.

This is a biggie when delving into the creative potential of machine-generated art and imagery. The file size of the images generated by DALL·E or similar models depends on various factors, such as the resolution of the image, the complexity of the generated content and the specifics of the implementation. Generally, generating very high-resolution images is computationally intensive and therefore often not feasible.

In our case, the images we generated averaged 430 KB, consistent with standard digital/online image file sizes and resolutions. Our images were generated as .webp files. WebP is an image format developed by Google that employs both lossy and lossless compression, offering smaller file sizes than JPEG and PNG at comparable image quality. The question remains how these images would be used outside of digital applications, as the files are small and digital-centric. WebP files may need converting to more traditional print formats like JPEG or TIFF, since they're primarily optimized for web use and lack broader support in print workflows.
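To put the print question in rough numbers, here is a minimal sketch of the arithmetic linking pixel dimensions to physical print size. The 1024 × 1024 pixel size is an assumption for illustration (we did not record our images' pixel dimensions), 300 DPI is the common rule of thumb for print, and the helper names are our own.

```python
MM_PER_INCH = 25.4


def print_size_mm(width_px: int, height_px: int, dpi: int = 300) -> tuple[float, float]:
    """Largest print size in millimetres at the given resolution."""
    return (
        round(width_px / dpi * MM_PER_INCH, 1),
        round(height_px / dpi * MM_PER_INCH, 1),
    )


def pixels_needed(width_mm: float, height_mm: float, dpi: int = 300) -> tuple[int, int]:
    """Pixel dimensions needed to fill a print of the given size."""
    return (
        round(width_mm / MM_PER_INCH * dpi),
        round(height_mm / MM_PER_INCH * dpi),
    )


# Assumed pixel size, for illustration only.
print(print_size_mm(1024, 1024))  # (86.7, 86.7) mm at 300 DPI
# An A4 page is 210 x 297 mm.
print(pixels_needed(210, 297))    # (2480, 3508) pixels at 300 DPI
```

Under those assumptions, a generated image would print cleanly at under 9 cm square, well short of an A4 page. Converting a WebP file to TIFF or JPEG changes the container, not the pixel count, so conversion alone does not close that gap.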

Conclusion

While AI extends the boundaries of artistic creation, human artists remain essential for imbuing artworks with depth, meaning, and the ineffable qualities that define human experience. Collaborations between humans and machines, leveraging the strengths of both, may represent the most promising path forward in the realm of AI-generated artworks.

In this era of innovation, embracing AI as a creative partner promises to redefine art's landscape, challenging perceptions and inspiring new narratives. So, let us know if you are up for experimenting with AI-generated image content in your next brief. The parameters are set, but the creative potential is enticing. As creators, we’re embarking on an odyssey where imagination knows no bounds, and the journey itself will be the masterpiece.

Adelle Chang

Business and Design Director